Tech

What is going on under the hood

A little history

Things evolved over the years. It all began in 2014 with running a fairly simple Django application on a single server.

Baremetal, native, no virtualization, no containers. No CI/CD and no automation.

Heck, not even tests.

Initially, for the fun of it.

A few years earlier, around 2009, I had my first contact with web development.

I was tasked with maintaining a PHP application that would render HTML via XSLT.

Back then, I had a feeling that things ought to be less cumbersome and more friendly.

Around 2013, curiosity got the better of me and I went looking for a technical ecosystem that would appeal to me.

Spring MVC looked intriguing - from a distance. Back then, I thought of it as too heavy for my needs.

At that time, I was looking for something more pragmatic. Less memory hungry, more expressive.

I found Django, and I was hooked. I started to learn Python along the way.

To me, back then, the biggest competitor was Ruby on Rails. I felt that Python was more likely to be of use in other areas, as well.

So, that's why this page is made with Django. I like the framework, and I like Python.

They served me well over the years.

I initially ran an Apache web server as a reverse proxy in front of the Django application.

Update the page? Local testing, git commit & push, then check out the latest version on the server.

Conveniently, the server restarted automatically whenever a change to wsgi.py was detected.

Some time around 2017, I learned about docker and containers. I started to containerize the Django application.

In the meantime, I had of course set up DNS and email services (postfix + dovecot). I think GitLab came in around 2018, and I started to use it for source control and CI/CD.

Things just started to escalate from there. I started to containerize everything. I started to use kubernetes.

Sometimes, I got the opportunity to work for a company that would employ exactly the technology that interested me the most.

I picked up some great inspiration over the years. Subsequently, I started to set up an environment that would usually be found in a company, serving a lot more developers than just me: I aim for scalability.

Dear visitor!

If you happen to find any content on this site interesting, or if it even helps you in your own projects and your day job, that would be a great honor to me.

Current tech in the grid

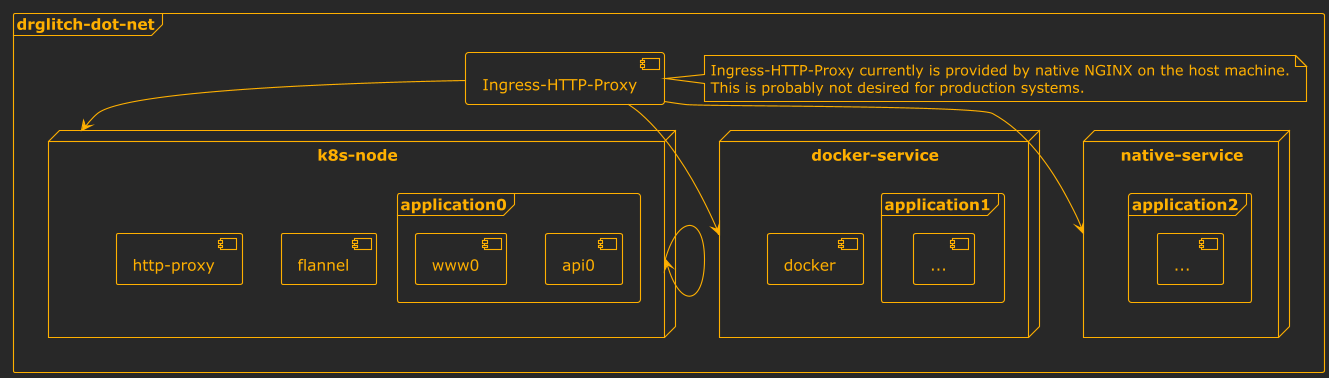

My current approach mandates running every web application inside a container, usually orchestrated by kubernetes. Currently, my kubernetes cluster spans 14 nodes of different sizes and capacities. A few tasks remain directly on certain baremetal machines: email, DNS and TLS termination. Also, the kubernetes cluster's worker nodes are mostly VMs running on multiple baremetal machines.

- Debian GNU/Linux, Ubuntu GNU/Linux

- NGINX (native, k8s)

- Let's Encrypt (native)

- postfix (native)

- dovecot (native)

- bind9 (native)

- wireguard (native)

- GitLab (native)

- docker (native)

- docker registry (docker)

- Nextcloud (docker)

- Jitsi (docker)

- Kubernetes (native, kubeadm)

- flux CD (k8s)

- Sonatype Nexus (k8s)

- Grafana (k8s)

- Prometheus (k8s)

- Keycloak (k8s)

- Kong (k8s)

- PostgreSQL (k8s)

- MariaDB (k8s)

- Redis (k8s)

Architecture and base concepts

From an architecture-centric perspective, my setup of course has a number of deficits. For one, I only have a limited number of physical machines, which restricts the ability to scale out for resilience. Also, a real-life production scenario should not see an email server running on the same machine as the kubernetes master, for example. Nevertheless, I want the setup to be suitable for way larger installations and heavier requirements. Given the appropriate physical resources, it is straightforward to remove these constraints and deficits. As I want to provide a more generic level of abstraction and aim to further isolate operational risk, I decided to set up most worker nodes as virtual machines on my physical hosts. They are interconnected via a wireguard VPN, which is also used to connect to the kubernetes cluster.

Why yes, here's an architecture diagram:

Integration and Flow

GitLab is used for source control and CI/CD. The CI/CD pipeline builds docker images and pushes them to the docker registry. I usually bundle my applications as helm charts, which are then pushed to the Nexus repository. Flux CD continuously rolls out the latest versions of the installed helm charts. A public variant of the Flux CD IaC project can be found on my GitLab.
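
To make the flow more tangible, here is a minimal sketch of the commands a single pipeline run might boil down to. It is not taken from my actual pipeline: the registry host, the Nexus URL, the function name and the assumption that the chart carries the same name as the application are all hypothetical, and credentials are left out entirely.

```python
from typing import List

# Hypothetical names, for illustration only; none of them come from this page.
REGISTRY = "registry.example.org"
NEXUS_HELM_REPO = "https://nexus.example.org/repository/helm-hosted/"


def pipeline_commands(app: str, version: str, chart_dir: str) -> List[List[str]]:
    """Return the shell commands of one build-and-publish run, in order."""
    image = f"{REGISTRY}/{app}:{version}"
    # helm package writes <chart name>-<version>.tgz; this sketch assumes
    # that the chart name equals the application name.
    chart_archive = f"{app}-{version}.tgz"
    return [
        # 1. Build the application image and push it to the docker registry.
        ["docker", "build", "--tag", image, "."],
        ["docker", "push", image],
        # 2. Package the helm chart with a matching chart and app version.
        ["helm", "package", chart_dir, "--version", version, "--app-version", version],
        # 3. Assumption: the archive is uploaded to Nexus with a plain HTTP upload.
        ["curl", "--fail", "--upload-file", chart_archive, NEXUS_HELM_REPO],
    ]
```

There is deliberately no deployment step in this list. Once the new chart version is available in the repository, Flux CD picks it up from inside the cluster, so the pipeline never needs to talk to kubernetes directly.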

Node management

The kubernetes cluster was set up using kubeadm. The nodes are managed using fabric, which I find a more lightweight, but definitely more "hackish" alternative to ansible.

Where ansible is mostly declarative, fabric is strictly imperative and boils down to mostly running shell commands remotely on a swarm of machines.

The details can be found on my GitLab.
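
As a rough illustration of that imperative style, the following sketch runs one shell command on a list of hosts, one after another. It assumes Fabric 2.x or later and working SSH access; the host names and the function names are made up for this example and are not taken from my repository.

```python
from typing import Callable, Dict, Iterable

# Hypothetical host names; the real inventory is not part of this page.
WORKERS = ["worker-1.example.internal", "worker-2.example.internal"]


def fabric_runner(host: str, command: str) -> str:
    """Run one shell command on one host over SSH and return its stdout."""
    # Imported lazily so that this module also loads where Fabric is not installed.
    from fabric import Connection

    with Connection(host) as conn:
        result = conn.run(command, hide=True)
    return result.stdout.strip()


def run_everywhere(
    hosts: Iterable[str],
    command: str,
    runner: Callable[[str, str], str] = fabric_runner,
) -> Dict[str, str]:
    """Imperatively run the same command on every host, in the given order."""
    return {host: runner(host, command) for host in hosts}
```

Calling `run_everywhere(WORKERS, "uname -r")` would return the kernel version per host. The `runner` parameter exists only so that the loop can be tried out without touching a real machine.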

Application development

Nothing too fancy here. This website is still a Django application, running in high availability mode (3 replicas, that is). I try to keep up with the latest dependency versions. I think the largest maintenance tasks were the migration to Python 3 and the overall packaging as a docker image and helm chart. The page comes with some static content, so there are NGINX instances serving that content, as well. The PostgreSQL database of the application is also running in high availability mode (3 replicas) and is managed by Zalando's Postgres Operator. Stylesheets are written in LESS and compiled ahead of time during the application build.
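
On the Django side, handing static content over to NGINX usually comes down to a few settings. The fragment below is a sketch rather than my actual configuration: the `APP_HOME` variable and the `/app` default are assumptions about how such an image might be laid out.

```python
import os
from pathlib import Path

# Assumption: the image keeps the application under /app unless APP_HOME says otherwise.
APP_HOME = Path(os.environ.get("APP_HOME", "/app"))

# URL prefix under which browsers request static files.
STATIC_URL = "/static/"

# Directory that `python manage.py collectstatic` copies all static files into.
# An NGINX container can serve this directory directly, bypassing Django.
STATIC_ROOT = APP_HOME / "static"
```

With a layout like this, the compiled stylesheets end up in `STATIC_ROOT` at build time, and the Django replicas never have to serve a single CSS file themselves.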